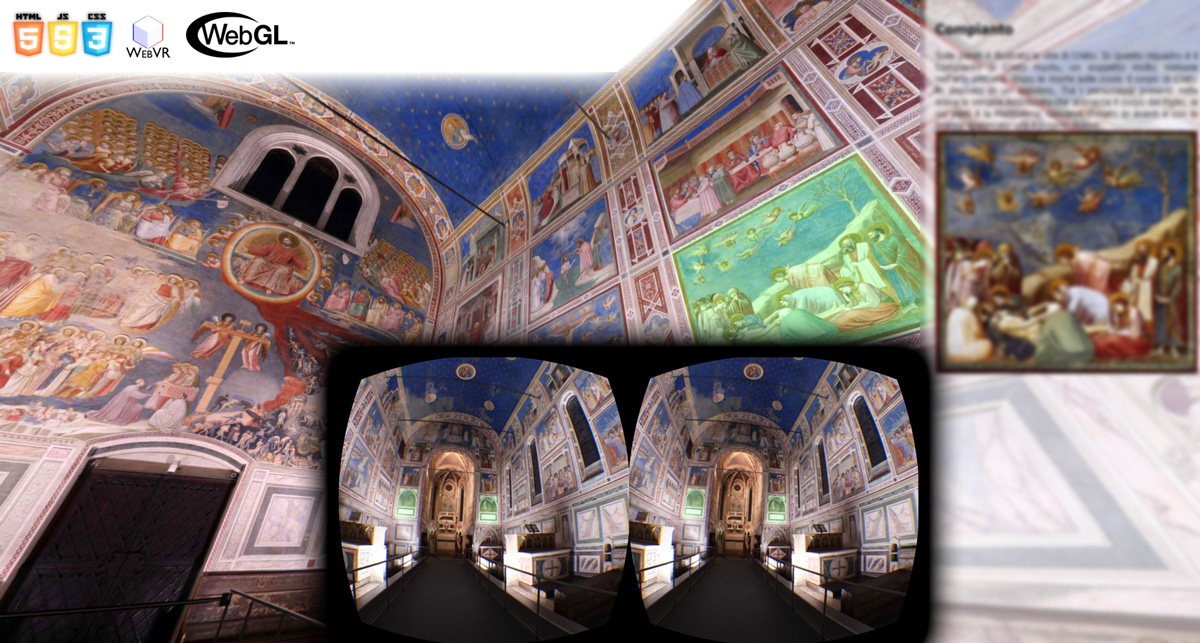

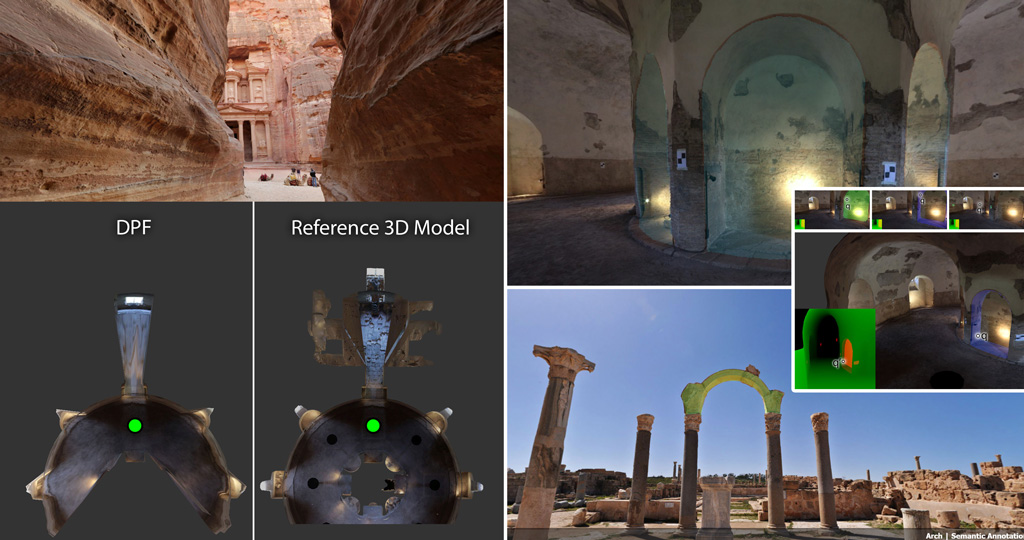

The DPF (Depth Panoramic Frame) is a compact data model developed by B. Fanini and E. d’Annibale (CNR ISPC, formerly ITABC) for transporting omnidirectional image-based data (panoramic images and videos) for VR dissemination. It is suitable for both online and local use, including web apps. It provides a full sense of presence and scale in VR by restoring the 3D space without transmitting the original dataset (e.g., very large point clouds), relying instead on an optimized egocentric encoding. The original work was presented at the 14th Eurographics Workshop on Graphics and Cultural Heritage.

    @inproceedings{fanini2016framework,
      title={A framework for compact and improved panoramic VR dissemination},
      author={Fanini, Bruno and d'Annibale, Enzo},
      booktitle={Proceedings of the 14th Eurographics Workshop on Graphics and Cultural Heritage},
      pages={33--42},
      year={2016},
      organization={Eurographics Association}
    }
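
The egocentric restoration described above can be illustrated with a short sketch. Each panorama pixel defines a viewing direction from the capture point, and a per-pixel depth value scales that direction back into a 3D position. This minimal Python version assumes an equirectangular layout and a linear depth encoding. The function names, the axis convention and the `max_depth` parameter are hypothetical and are not taken from the DPF Library.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def direction_from_uv(u: float, v: float) -> Vec3:
    """Unit viewing direction for normalized equirectangular coordinates.

    Assumed (hypothetical) convention: u in [0, 1] spans longitude -pi..pi,
    v in [0, 1] spans latitude +pi/2 (top row) .. -pi/2 (bottom row);
    Y is up and the panorama centre (u = v = 0.5) looks along -Z.
    """
    lon = (u - 0.5) * 2.0 * math.pi
    lat = (0.5 - v) * math.pi
    return (
        math.cos(lat) * math.sin(lon),
        math.sin(lat),
        -math.cos(lat) * math.cos(lon),
    )


def restore_point(u: float, v: float, depth_sample: float, max_depth: float) -> Vec3:
    """Restore the 3D point seen from the panorama centre at pixel (u, v).

    Assumes depth is stored linearly normalized to [0, 1] and rescaled by
    max_depth; this is an illustrative encoding, not the published DPF one.
    """
    if not 0.0 <= depth_sample <= 1.0:
        raise ValueError("depth_sample must be in [0, 1]")
    distance = depth_sample * max_depth
    dx, dy, dz = direction_from_uv(u, v)
    return (dx * distance, dy * distance, dz * distance)
```

Applying `restore_point` at every pixel centre, e.g. `u = (col + 0.5) / width` and `v = (row + 0.5) / height`, yields the egocentric point set around the viewer. In a GPU-based pipeline the same mapping would typically be evaluated per vertex or per fragment rather than in a CPU loop.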

The DPF data transport and DPF Library offer:

- Correct stereoscopic VR viewing, by restoring a 3D virtual space on the fly

- GPU-based encoding/decoding of omnidirectional data, semantic queries, and 3D restoration

- Streaming of compact and optimized image-based data (no geometry)

- Optimal level of detail for HMDs with minimal data transmission

- Fast and easy semantic enrichment by non-professional users

- Support for video streams

- Support for real-time Depth-of-Field effects

- Easy event handling on semantically enriched areas (see examples)

- Easy deployment on a webpage

- Easy integration with external devices
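
The semantic queries and event handling listed above can be sketched as a direction-to-pixel lookup. A semantic mask panorama stores an integer annotation ID per pixel, a viewing direction is projected back to equirectangular coordinates, and the ID found there selects an event handler. This CPU-side Python sketch only illustrates the idea. The mask-as-integer-grid representation, the ID `0` meaning "no annotation", the axis convention and the names `uv_from_direction`, `query_semantic` and `dispatch` are all hypothetical assumptions; the DPF Library performs its semantic queries on the GPU.

```python
import math
from typing import Callable, Dict, Sequence, Tuple


def uv_from_direction(x: float, y: float, z: float) -> Tuple[float, float]:
    """Project a direction onto normalized equirectangular coordinates.

    Assumed (hypothetical) convention: Y is up and u = v = 0.5 looks along -Z.
    """
    n = math.sqrt(x * x + y * y + z * z)
    if n == 0.0:
        raise ValueError("direction must be non-zero")
    lon = math.atan2(x, -z)  # longitude in [-pi, pi], 0 when looking along -Z
    lat = math.asin(max(-1.0, min(1.0, y / n)))  # clamp guards float rounding
    return lon / (2.0 * math.pi) + 0.5, 0.5 - lat / math.pi


def query_semantic(mask: Sequence[Sequence[int]], direction: Tuple[float, float, float]) -> int:
    """Return the annotation ID stored in the mask pixel hit by `direction`."""
    height, width = len(mask), len(mask[0])
    u, v = uv_from_direction(*direction)
    col = min(int(u * width), width - 1)  # u == 1.0 wraps onto the last column
    row = min(int(v * height), height - 1)
    return mask[row][col]


def dispatch(
    mask: Sequence[Sequence[int]],
    direction: Tuple[float, float, float],
    handlers: Dict[int, Callable[[int], None]],
) -> int:
    """Query the mask and call the handler registered for the ID, if any."""
    sem_id = query_semantic(mask, direction)
    handler = handlers.get(sem_id)
    if handler is not None:
        handler(sem_id)
    return sem_id
```

For example, with a 2x4 mask whose only annotated pixel lies at the panorama centre, looking straight ahead (`(0, 0, -1)`) returns that pixel's ID and fires its handler, while looking left (`(-1, 0, 0)`) returns `0` and fires nothing.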

Getting Started with the DPF Library

DPF is now being integrated into the new ATON framework as a new component called XPF. Check out examples here.

The old, deprecated JavaScript implementation of the DPF Library is still available on GitHub: https://github.com/phoenixbf/dpf.